Fragmentation on Mac OS X: Time for a Change

Windows systems suffer from file system fragmentation and benefit from defragmentation. Windows 7 includes a defragmenter (as did previous versions of Windows) and automatically defragments the file system from time to time.

The Mac takes a different approach. There are quite a few mechanisms built into the HFS+ file system to keep the system working smoothly (check out this post for some details), but the most interesting one in terms of fragmentation is on-the-fly defragmentation.

In a nutshell, when any file under 20 MB in size is opened, the system checks to see if it's fragmented. If it is, and some other conditions are met, then the file is defragmented automatically, right then and there.
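The check described above can be sketched roughly as follows. This is only an illustration, not the HFS+ implementation: `OpenedFile`, `should_defragment_on_open`, and the `other_conditions_met` flag are hypothetical names, and the flag is a stand-in for the additional conditions, which aren't listed here. Only the 20 MB limit comes from the post.

```python
from dataclasses import dataclass

# The 20 MB limit cited in the post (20 * 1024 * 1024 bytes).
MAX_AUTO_DEFRAG_BYTES = 20 * 1024 * 1024


@dataclass
class OpenedFile:
    """Hypothetical description of a file at open time."""
    size_bytes: int
    fragment_count: int  # number of separate on-disk pieces


def should_defragment_on_open(f: OpenedFile, other_conditions_met: bool = True) -> bool:
    """Return True if the file would qualify for on-the-fly defragmentation."""
    is_small = f.size_bytes < MAX_AUTO_DEFRAG_BYTES
    # Assumption: "fragmented" means more than one piece; the real
    # file system may use a different threshold.
    is_fragmented = f.fragment_count > 1
    return is_small and is_fragmented and other_conditions_met
```

Under these assumptions, a 5 MB file in three pieces qualifies, while a 700 MB movie in thousands of pieces does not, which is exactly the gap this post is about.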

This works great for keeping those "small" files defragmented, but back when this feature was first added to HFS+ about 8 years ago, a 20 MB file was pretty large. Not so anymore.

Another change in the demands on the file system is the advent of iTunes and large media files. It's not uncommon today to download podcasts that are more than 20 MB, video podcasts that are hundreds of megabytes, and movies that are a gigabyte or more. It's also quite easy with iTunes to fill your hard drive with downloaded media.

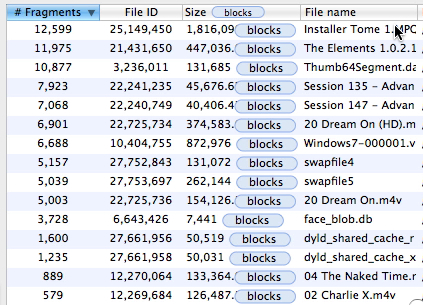

Check out this fragmentation report of the hard drive in my laptop:

That 1st file, a recent download, is split into 12,599 fragments. To put that in perspective, the drive I have in that laptop has a random seek time of about 12 ms. 12 ms x 12,599 is about 151 seconds. That's how much time the drive could spend just seeking to read this one file!
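The arithmetic is easy to reproduce. Here is a small sketch, assuming the worst case of one full random seek per fragment; the function name `worst_case_seek_seconds` is made up for illustration.

```python
def worst_case_seek_seconds(fragments: int, seek_ms: float) -> float:
    """Upper bound on seek time, assuming one random seek per fragment."""
    return fragments * seek_ms / 1000.0


# Figures from the report: 12,599 fragments on a drive with ~12 ms seeks.
seconds = worst_case_seek_seconds(12_599, 12.0)
print(f"{seconds:.0f} seconds, or about {seconds / 60:.1f} minutes")
```

In practice, neighboring fragments are often close together on disk, so the real cost is likely lower than this bound.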

The 2nd file is an iPad application. The 4th, 5th, and 6th files are also iTunes downloads. In fact, most of the fragmented files are.

There were only a couple of files under 20 MB that had any fragmentation at all, so the auto-defrag is doing its job. But 20 MB is too small a limit for automatic defragmentation in 2010. In fact, as far as I'm concerned, any attempt to open a fragmented file, no matter how big it is, should make that file a candidate: if not for instant defragmentation, then for a queue of files scheduled for background defragmentation. But even if that's out of the picture, the 20 MB limit should be raised in proportion to the growth in size and performance of computers since 2003, to at least 200 MB.
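To make the proposal concrete, here is a rough sketch of what such a policy might look like. It combines both suggestions (a higher limit for instant defragmentation, plus a background queue for everything larger), which is one possible reading of the idea. All names here (`Action`, `on_open`, `PROPOSED_INSTANT_LIMIT_BYTES`) are hypothetical, and nothing like this exists in HFS+ as far as this post knows.

```python
from collections import deque
from enum import Enum

# The post's suggested minimum for a raised limit: 200 MB.
PROPOSED_INSTANT_LIMIT_BYTES = 200 * 1024 * 1024


class Action(Enum):
    NONE = "none"
    DEFRAG_NOW = "defrag_now"
    QUEUE_FOR_BACKGROUND = "queue_for_background"


def on_open(path: str, size_bytes: int, fragment_count: int, queue: deque) -> Action:
    """Decide what to do with a file at open time under the proposed policy."""
    if fragment_count <= 1:
        return Action.NONE  # not fragmented, nothing to do
    if size_bytes < PROPOSED_INSTANT_LIMIT_BYTES:
        return Action.DEFRAG_NOW  # small enough to fix immediately
    if path not in queue:
        queue.append(path)  # too big to fix now; schedule for later
    return Action.QUEUE_FOR_BACKGROUND


# Example: a 150 MB podcast is fixed on the spot, a 1.5 GB movie is queued.
pending = deque()
print(on_open("podcast.m4v", 150 * 1024 * 1024, 40, pending))
print(on_open("movie.m4v", 1536 * 1024 * 1024, 12_599, pending))
```

A background worker could then drain the queue while the machine is idle, so large files get cleaned up without slowing down the open itself.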

(It's not configurable - you can see in the source here that it's a constant: 20 * 1024 * 1024.)